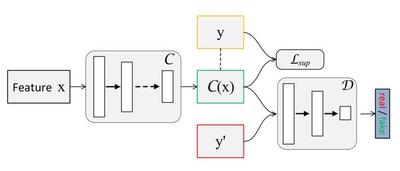

Multimedia collections usually induce multiple emotions in audiences. The distribution of these co-occurring emotions can be leveraged to facilitate emotion tagging, yet it has not been thoroughly explored. To address this, we propose an adversarial learning approach that captures emotion distributions for emotion tagging of multimedia data. The proposed approach consists of an emotion classifier and a discriminator. The emotion classifier predicts the emotion labels of multimedia data from their content. The discriminator distinguishes the predicted emotion labels from the ground truth labels. The two are trained simultaneously, in competition with each other. By jointly minimizing the traditional supervised loss and maximizing the distribution similarity between the predicted and the ground truth emotion labels, the proposed approach captures both the mapping from multimedia content to emotion labels and the prior distribution of the emotion labels. It thus achieves state-of-the-art performance for multiple emotion tagging, as demonstrated by experimental results on four benchmark databases.
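The two competing objectives described above can be illustrated with a minimal sketch. This is not the authors' implementation: the linear `discriminator`, the trade-off weight `lam`, and the standard GAN-style log losses are all assumptions chosen for illustration, and the abstract does not specify the exact form of either loss.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def bce(targets, probs, eps=1e-12):
    """Mean binary cross-entropy over the emotion labels of one sample."""
    total = sum(
        t * math.log(p + eps) + (1 - t) * math.log(1 - p + eps)
        for t, p in zip(targets, probs)
    )
    return -total / len(targets)


def discriminator(labels, weights, bias):
    """Hypothetical linear discriminator (an assumption, not from the paper).

    Returns the estimated probability that `labels` is a ground-truth
    label vector rather than a classifier prediction.
    """
    return sigmoid(sum(w * x for w, x in zip(weights, labels)) + bias)


def classifier_loss(y_true, y_pred, d_weights, d_bias, lam=0.5, eps=1e-12):
    """Supervised loss plus an adversarial term (assumed weighting `lam`).

    The adversarial term is small when the discriminator mistakes the
    predicted labels for ground-truth labels.
    """
    supervised = bce(y_true, y_pred)
    adversarial = -math.log(discriminator(y_pred, d_weights, d_bias) + eps)
    return supervised + lam * adversarial


def discriminator_loss(y_true, y_pred, d_weights, d_bias, eps=1e-12):
    """Discriminator objective: score real labels high, predictions low."""
    real = discriminator(y_true, d_weights, d_bias)
    fake = discriminator(y_pred, d_weights, d_bias)
    return -math.log(real + eps) - math.log(1 - fake + eps)
```

In an actual training loop the two losses would be minimized alternately, each with respect to its own model's parameters: the classifier's for `classifier_loss` and the discriminator's for `discriminator_loss`.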