Occluded Facial Expression Recognition with Step-Wise Assistance from Unpaired Non-Occluded Images

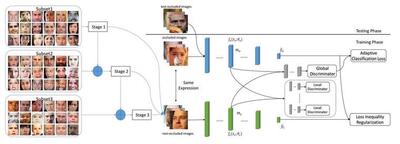

Although facial expression recognition has improved in recent years, recognizing expressions from occluded facial images in the wild remains very challenging. Because large-scale facial expression datasets with diverse occlusion types and positions are lacking, it is very difficult to learn a robust occluded expression classifier directly from limited occluded images. Since facial images without occlusions usually provide more information for facial expression recognition than occluded ones, we propose a step-wise learning strategy for occluded facial expression recognition that uses unpaired non-occluded images as guidance in the feature and label spaces. Specifically, we first measure the complexity of the non-occluded data using distribution density in a feature space and split the data into three subsets. In this way, the occluded expression classifier is guided by basic samples first and subsequently leverages more meaningful and discriminative samples. Complementary adversarial learning techniques are applied in the global-level and local-level feature spaces throughout, forcing the distribution of the occluded features to be close to the distribution of the non-occluded features. We also account for the varying transferability of different images via an adaptive classification loss. A loss inequality regularization is imposed in the label space to calibrate the output values of the occluded network. Experimental results show that our method improves performance on both synthesized and realistic occluded databases.
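The density-based split described above can be illustrated with a minimal sketch. It is not the exact procedure of this work, and several choices are assumptions made only for illustration: Euclidean distance, a fixed cutoff `radius`, local density defined as the number of neighbours within that radius, equal-sized subsets, and the convention that high-density samples are the "basic" ones. The function names `local_density` and `split_by_density` are hypothetical.

```python
import math


def local_density(features, radius):
    """For each sample, count how many other samples lie within `radius`.

    Assumption: Euclidean distance and a hard cutoff stand in for the
    "distribution density" mentioned in the text.
    """
    densities = []
    for i, a in enumerate(features):
        count = 0
        for j, b in enumerate(features):
            if i != j and math.dist(a, b) <= radius:
                count += 1
        densities.append(count)
    return densities


def split_by_density(features, radius, n_subsets=3):
    """Rank samples from dense to sparse and cut the ranking into subsets.

    Assumption: subsets are equal-sized (the last may be smaller), and the
    densest subset is treated as the easiest one.
    """
    densities = local_density(features, radius)
    # Stable sort: samples with equal density keep their original order.
    order = sorted(range(len(features)), key=lambda i: -densities[i])
    size = math.ceil(len(order) / n_subsets)
    return [order[k * size:(k + 1) * size] for k in range(n_subsets)]


# Toy 2-D "features": one tight cluster, one pair, and three isolated points.
toy_features = [
    (0.0, 0.0), (0.1, 0.0), (0.0, 0.1), (0.1, 0.1),
    (1.0, 1.0), (1.1, 1.0),
    (5.0, 5.0), (9.0, 9.0), (-7.0, 3.0),
]
basic, intermediate, hard = split_by_density(toy_features, radius=0.5)
```

In a step-wise schedule, training would draw guidance from `basic` first and add `intermediate` and `hard` in later stages. In practice the features would come from a network trained on non-occluded images rather than hand-written points.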